Alumni of the University of Bonn

Here is a list of publications and projects I worked on during my time as a PhD student.

Publications

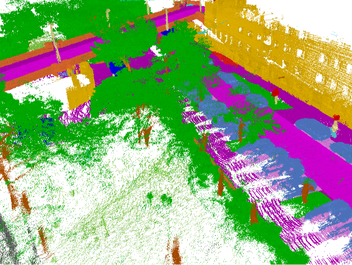

SemanticKITTI: A Dataset for Semantic Segmentation of Point Cloud Sequences

Jens Behley*, Martin Garbade*, Andres Milioto, Jan Quenzel, Sven Behnke, Cyrill Stachniss, Jürgen Gall. IEEE International Conference on Computer Vision (ICCV), 2019. * denotes equal contribution. (PDF, Video-Dataset, Video-Semantic Scene Completion)

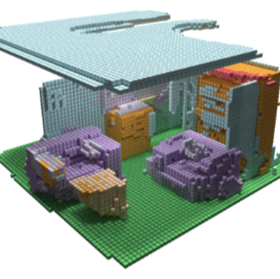

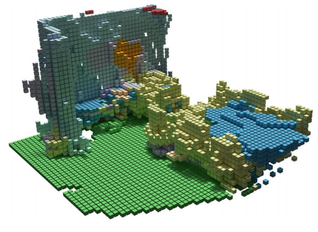

Semantic Scene Completion from a Single Depth Image using Adversarial Training

Yueh-Tung Chen, Martin Garbade, Jürgen Gall. IEEE International Conference on Image Processing (ICIP), 2019. (PDF)

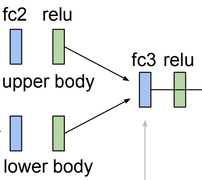

Two Stream 3D Semantic Scene Completion

Martin Garbade, Yueh-Tung Chen, Johann Sawatzky, Jürgen Gall. CVPR Workshop on Multimodal Learning and Applications (MULA), 2019. (PDF)

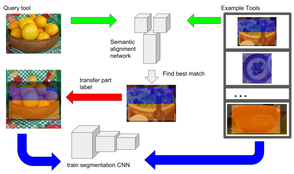

Ex Paucis Plura: Learning Affordance Segmentation from Very Few Examples

Johann Sawatzky, Martin Garbade, Jürgen Gall. German Conference on Pattern Recognition (GCPR), 2018. (PDF)

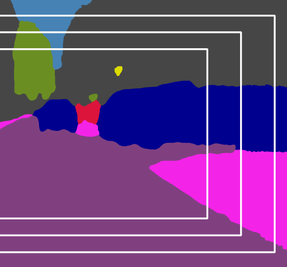

Thinking Outside the Box: Spatial Anticipation of Semantic Categories

Martin Garbade, Jürgen Gall. British Machine Vision Conference (BMVC), 2017. (PDF, Images/Data, Talk, Code)

Real-time Semantic Segmentation with Label Propagation

Rasha Sheikh, Martin Garbade, Jürgen Gall. ECCV Workshop on Computer Vision for Road Scene Understanding and Autonomous Driving (CVRSUAD'16), Springer, LNCS 9914, pp. 3-14, 2016. © Springer-Verlag (PDF)

Handcrafting vs Deep Learning: An Evaluation of NTraj+ Features for Pose Based Action Recognition

Martin Garbade, Jürgen Gall. GCPR Workshop on New Challenges in Neural Computation and Machine Learning (NC2), 2016. (PDF)

Projects

Goose Detection

Neural-network-based falcon/goose detection system that protects falcon nests from invading geese, 2016 to present (German Media Article)

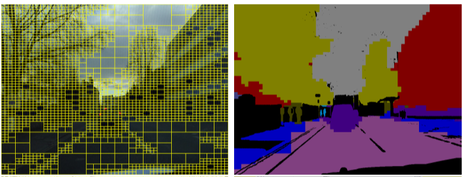

Semantic Scene Completion on SemanticKITTI

Neural-network-based road scene anticipation for anticipatory driving (German Media Article)

Input: single laser scan

Output: completed scene